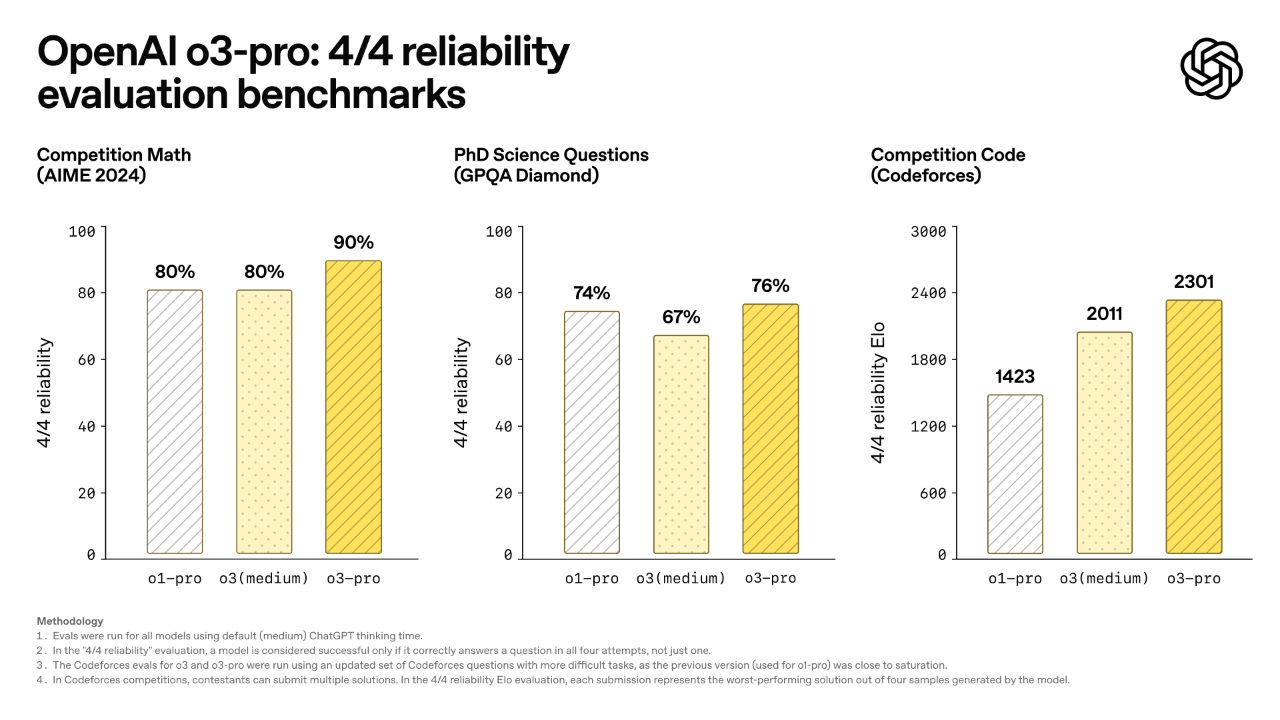

ChatGPT o3-Pro Is Out — OpenAI's Most Powerful (and Expensive) Model

Jun 13, 2025

Here’s a paradox: in the world of AI, faster isn’t always better. OpenAI just dropped o3-pro, and it’s a deliberate step away from speed to embrace something more elusive: deep, contextual precision. This new tool-native version of the o3 model isn’t just another upgrade; it’s a strategic pivot. Designed for multi-step reasoning, tool use, and deliberate thinking, o3-pro is OpenAI’s high-stakes answer to the most complex enterprise-grade challenges.

But don’t expect rapid-fire responses. o3-pro is slow. By design.

Let’s break down what this means—for developers, businesses, and the future of intelligent systems.

Why OpenAI Sacrificed Speed for Precision

While most AI models chase ever-faster inference times, o3-pro takes a different route. It’s tuned for accuracy, especially in scenarios where context, uncertainty, and tool orchestration matter more than milliseconds.

What makes o3-pro different?

- Multi-modal inputs: Accepts both text and images.
- 200,000 token context window: Ideal for lengthy documents, codebases, or multi-part reasoning.
- Integrated tools: File analysis, vision input, web browsing (limited), and MCP (Model Context Protocol).
- API-first design: Available via the new v1/responses endpoint, allowing multi-turn conversations before returning a final answer.
- Massive output support: Up to 100,000 tokens, perfect for detailed, structured outputs or synthetic research.

Yes, it can take minutes per response. But for use cases like data audits, legal analysis, financial modeling, or advanced research synthesis, that’s a trade many are willing to make.
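To make those limits concrete, here is a minimal sketch of how a developer might sanity-check a request before sending it to the v1/responses endpoint. The 200,000 and 100,000 token figures come from the list above; the `estimate_tokens` heuristic (about four characters per token), the helper names, and the assumption that prompt and output share the same context window are illustrative, not something OpenAI specifies here. The sketch only builds the JSON body and makes no network call.

```python
import json

# Limits quoted in this article.
CONTEXT_WINDOW_TOKENS = 200_000
MAX_OUTPUT_TOKENS = 100_000


def estimate_tokens(text: str) -> int:
    """Rough estimate only: assumes about 4 characters per token."""
    return max(1, len(text) // 4)


def build_request(prompt: str, max_output_tokens: int) -> dict:
    """Build a JSON body for POST /v1/responses after checking the quoted limits.

    Assumes prompt tokens and output tokens count against the same window.
    """
    if max_output_tokens > MAX_OUTPUT_TOKENS:
        raise ValueError("requested output exceeds the 100,000-token cap")
    needed = estimate_tokens(prompt) + max_output_tokens
    if needed > CONTEXT_WINDOW_TOKENS:
        raise ValueError(f"request needs ~{needed} tokens; window is {CONTEXT_WINDOW_TOKENS}")
    return {
        "model": "o3-pro",
        "input": prompt,
        "max_output_tokens": max_output_tokens,
    }


if __name__ == "__main__":
    body = build_request("Summarize the attached contract.", max_output_tokens=2_000)
    print(json.dumps(body, indent=2))
```

Because a single response can take minutes, any real client built on top of a body like this would also want a generous timeout or an asynchronous workflow rather than a blocking call with default settings.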

o3 Gets Cheaper—Way Cheaper

In a stunning pricing move, OpenAI also slashed o3’s cost by 80%, making its powerful reasoning capabilities more accessible:

- Input: $2 per million tokens (previously $10)
- Output: $8 per million tokens (previously $40)
- “Flex mode”: A dynamic option to balance speed and cost
- Cacheable inputs: As low as $0.50 per million tokens
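To see what these rates mean for a single request, here is a small back-of-the-envelope calculator. The per-million prices are the ones listed above; the function name, the example token counts, and the assumption that every cached input token bills at a flat $0.50 per million are illustrative.

```python
# Prices in USD per million tokens, as quoted in this article.
NEW_PRICES = {"input": 2.00, "cached_input": 0.50, "output": 8.00}
OLD_PRICES = {"input": 10.00, "cached_input": 10.00, "output": 40.00}  # old list had no cached rate here


def estimate_cost(input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0, prices: dict = NEW_PRICES) -> float:
    """Estimate the cost of one request in USD.

    cached_input_tokens is the portion of input_tokens billed at the cached rate
    (assumed to be a flat rate, which is a simplification).
    """
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_input_tokens
    total = (uncached * prices["input"]
             + cached_input_tokens * prices["cached_input"]
             + output_tokens * prices["output"])
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 150,000 input tokens, 20,000 output tokens.
    new = estimate_cost(150_000, 20_000)
    old = estimate_cost(150_000, 20_000, prices=OLD_PRICES)
    cached = estimate_cost(150_000, 20_000, cached_input_tokens=100_000)
    print(round(new, 2))     # 0.46
    print(round(old, 2))     # 2.3
    print(round(cached, 2))  # 0.31
```

For this hypothetical request the new price is one fifth of the old one, which matches the 80% cut, and caching two thirds of the input trims the bill further.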

This positions o3 not just as a premium product, but a mass-market contender. In head-to-heads, it’s holding its own against Claude Opus and Gemini Pro, at a fraction of the price. For startups and solo devs who couldn’t justify the spend before, this changes everything.

The Enterprise Bet: From Chatbots to Strategic Advisors

o3-pro isn’t built for casual chatbot interactions. It's for enterprises and professionals tackling layered problems—think insurance underwriters, regulatory compliance teams, or biomedical researchers.

Use cases where o3-pro shines:

- Legal analysis: Parsing massive contracts and suggesting changes with source justification.
- Financial forensics: Digging into multi-sheet spreadsheets for anomalies.
- Academic synthesis: Turning hundreds of citations into coherent, cited arguments.
- AI-assisted coding: Not just code generation, but multi-step refactoring or dependency tracing.

ChatGPT Pro and Team users now have o3-pro in the model picker, where it replaces o1-pro, but the bigger story is in API access. Enterprises can build custom workflows that leverage slow, deliberate reasoning, much as a human expert works through a hard problem step by step.

OpenAI’s Growing Appetite—and a Google Twist

Behind the scenes, OpenAI made a quiet yet seismic move: it signed a compute deal with Google Cloud. That’s right—OpenAI, historically a Microsoft-first company, is now embracing a multi-cloud strategy. Why?

Because compute is the real constraint in the AI arms race.

o3-pro uses a lot of compute. This pivot to Google signals not just scalability, but pragmatism. Even competitors are sharing silicon now. The walls between rivals are blurring in the name of performance and availability.

Meanwhile, OpenAI is reporting $10 billion in annual recurring revenue, up from $5.5B last year, driven by ChatGPT subscriptions, enterprise deals, and developer API usage. With 500 million weekly active users and 3 million business clients, its scale is breathtaking.

What's Next: The Age of Generative Cognition

CEO Sam Altman hints that we’re on the edge of something big. In his essay The Gentle Singularity, he points to 2026 as the year models may begin generating novel insights, not just echoing data. That’s what OpenAI is really optimizing for.

o3-pro is the first step in this evolution—from answering questions to thinking independently.

Even their delayed open-weight model is being retooled around this concept. By folding in their latest breakthroughs, OpenAI wants to redefine what “open” means—potentially marrying transparency with enterprise-grade reasoning.

o3-pro Is a Bet on What AI Should Be

Speed is nice. Precision is transformative.

With o3-pro, OpenAI is redefining how we think about AI value: not as fast answers, but thoughtful ones. In doing so, they’re carving out a new space for tool-integrated, deeply contextual agents that don’t just answer—they reason, choose, and act.

This isn’t a chatbot race anymore. It’s a cognition contest. And OpenAI just made its move.
